Who would have guessed that the culprit behind a company's website crash would be OpenAI's frenzied crawler bot, GPTBot?

(GPTBot is the web crawler OpenAI launched in 2023 to automatically collect data from across the Internet.)

Just in the past two days, the website of Triplegangers, a company with a seven-person team, suddenly went down, and the CEO and staff scrambled to find the cause.

What they found shocked them.

The culprit is OpenAI’s GPTBot.

According to the CEO, the OpenAI crawler’s “offensive” was nothing short of frenzied:

"We have over 65,000 products, each with its own page, and each page has at least three photos.

OpenAI is sending tens of thousands of server requests trying to download all of it: hundreds of thousands of photos, along with their detailed descriptions."

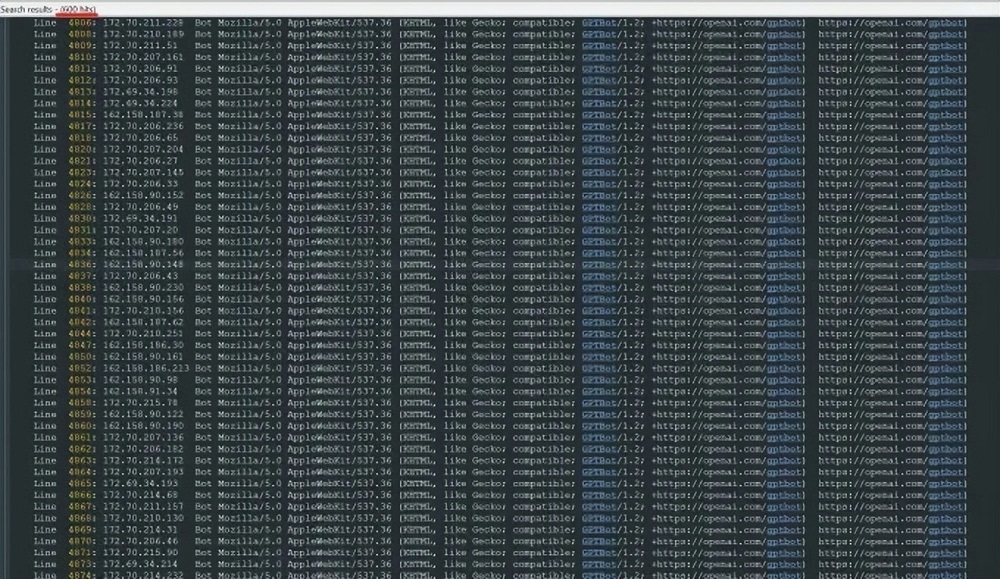

After analyzing the previous week's logs, the team further discovered that OpenAI had used more than 600 IP addresses to scrape its data.

Crawling at this scale brought the site down, and the CEO could only say, exasperated:
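The article does not say how the team analyzed its logs. As a rough sketch of the kind of check involved, the Python below counts the requests and distinct client IPs for one crawler in access-log lines, assuming the common Apache/Nginx "combined" log format. The function name `crawler_footprint` and the sample lines are hypothetical: the lines are made up, use documentation-range IPs, and carry a simplified user-agent string rather than OpenAI's exact header. Because user-agent headers can be spoofed, a match is only an indication of who sent the request.

```python
import re
from collections import Counter

# Assumed format: the "combined" access-log layout used by default in many
# Apache/Nginx setups:
# ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def crawler_footprint(lines, agent_token):
    """Count requests and distinct client IPs whose User-Agent contains agent_token."""
    token = agent_token.lower()
    hits_per_ip = Counter()
    for line in lines:
        match = LOG_RE.match(line)
        if match and token in match.group("agent").lower():
            hits_per_ip[match.group("ip")] += 1
    return sum(hits_per_ip.values()), len(hits_per_ip)


# Made-up sample lines for illustration only (hypothetical data).
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Jan/2025:13:55:36 +0000] "GET /products/1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.9 - - [10/Jan/2025:13:55:36 +0000] "GET /products/2 HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/Jan/2025:13:55:37 +0000] "GET /products/3 HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/Jan/2025:13:55:37 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

if __name__ == "__main__":
    requests, ips = crawler_footprint(SAMPLE_LOG, "GPTBot")
    print(requests, ips)  # → 3 2
```

On a real server, `lines` would be an open log file, e.g. `crawler_footprint(open("access.log"), "GPTBot")`, where the path is a placeholder.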

"This is basically a DDoS attack."

Worse still, all that crawling also drove heavy CPU usage and data downloads, sending the site's resource consumption on its cloud provider (AWS) soaring and pushing its bill up significantly.

Well, big AI companies are crawling like crazy, but small companies are footing the bill.

This small team's ordeal sparked plenty of discussion online. Some argued that calling what GPTBot does "scraping" is really just a euphemism for "stealing":

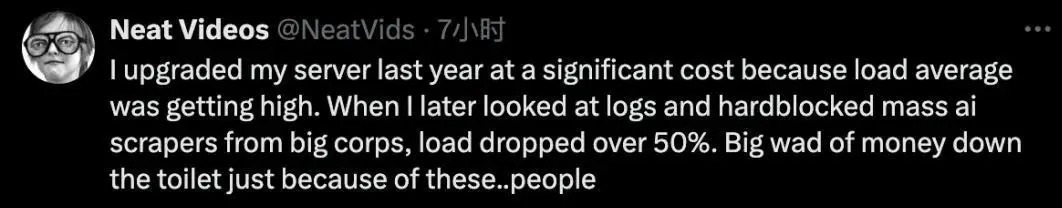

Others chimed in with similar experiences, saying they had saved a lot of money since blocking big companies' AI crawlers en masse.

Crashed by crawlers, and still in the dark about what was taken

So why did OpenAI crawl this startup’s data?

Simply put, its data is indeed of high quality.

Over more than a decade, Triplegangers' seven employees have built what the company bills as the largest "human digital twin" database.

The site hosts 3D image files scanned from real human models, and the photos are tagged in detail with information such as race, age, tattoos and scars, body type, and more.

That makes it highly valuable to 3D artists, game developers, and others who need to digitally recreate real human features.

Triplegangers' website does have a terms-of-service page that explicitly prohibits unauthorized AI from scraping its images.

But judging by the outcome, it had no effect at all.

The crux is that Triplegangers had not properly configured one file: robots.txt.

Robots.txt, also known as the Robots Exclusion Protocol, was created to tell search engine crawlers which parts of a website they should not crawl when indexing the web.

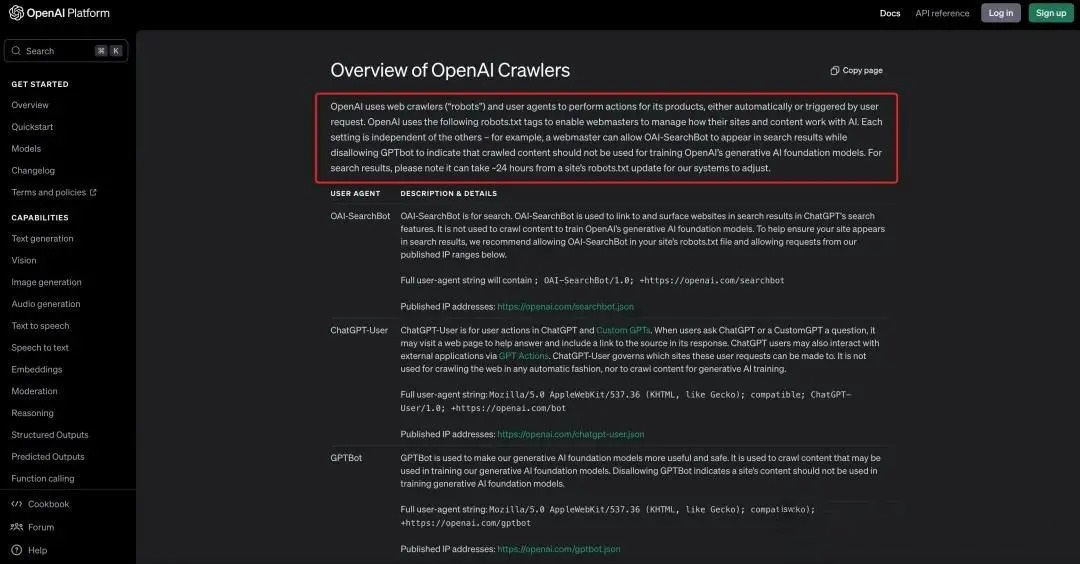

In other words, if a website does not want to be crawled by OpenAI, it must add a rule to robots.txt that names GPTBot as the user agent and explicitly tells it not to access the site.

Besides GPTBot, OpenAI also runs ChatGPT-User and OAI-SearchBot, each with its own user-agent token.
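As a minimal sketch, assuming a site wants to turn away all three OpenAI crawlers named above from every page, its robots.txt can list the three tokens as one group followed by `Disallow: /`. The Python below builds that file and checks it with the standard library's `urllib.robotparser`; the helper names `build_robots_txt` and `is_allowed` and the example.com URL are hypothetical. This only verifies what the file says: robots.txt is advisory, and whether a crawler obeys it is up to the crawler.

```python
from urllib.robotparser import RobotFileParser

# OpenAI crawler user-agent tokens named in the article.
OPENAI_AGENTS = ["GPTBot", "ChatGPT-User", "OAI-SearchBot"]


def build_robots_txt(blocked_agents):
    """Return robots.txt text with one group that disallows the whole site for blocked_agents."""
    lines = [f"User-agent: {agent}" for agent in blocked_agents]
    lines.append("Disallow: /")
    return "\n".join(lines) + "\n"


def is_allowed(robots_txt, user_agent, url):
    """Check, with the standard-library parser, whether robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    robots = build_robots_txt(OPENAI_AGENTS)
    print(robots)
    for agent in OPENAI_AGENTS + ["Googlebot"]:
        # example.com is a placeholder URL.
        print(agent, is_allowed(robots, agent, "https://example.com/products/123"))
```

The three OpenAI agents come back as not allowed, while Googlebot is allowed: the file has no `User-agent: *` group, so crawlers it does not name fall through to the default of "allowed".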

And according to the crawler documentation OpenAI has published, even a correctly configured robots.txt file does not take effect immediately.

It may take about 24 hours for OpenAI's systems to pick up the updated file...

The CEO said:

"If a website doesn't have its robots.txt file configured correctly, OpenAI and other companies take that to mean they can scrape the content as they please.

This is not an opt-in system."

As a result, Triplegangers' website was knocked offline during business hours, and the company was hit with hefty AWS bills.

By Wednesday (US Eastern Time), Triplegangers had a correctly configured robots.txt file in place.

As an extra precaution, the team also set up a Cloudflare account to block other AI crawlers such as Barkrowler and Bytespider.
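Cloudflare's bot blocking is configured through its own service, which the article doesn't detail. For a site without such a service, a rough application-level alternative is to refuse requests by user agent. The sketch below is a hypothetical WSGI middleware, not Cloudflare's mechanism; the token list is taken from crawler names mentioned in this article, and the helper names (`block_crawlers`, `hello_app`, `call`) are made up. It only stops bots that identify themselves honestly in the User-Agent header.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical blocklist: lowercase user-agent substrings for crawlers named in the article.
BLOCKED_AGENT_TOKENS = ("gptbot", "bytespider", "barkrowler")


def block_crawlers(app, tokens=BLOCKED_AGENT_TOKENS):
    """Wrap a WSGI app so requests whose User-Agent contains a blocked token get a 403."""
    def middleware(environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in agent for token in tokens):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Automated crawling is not permitted.\n"]
        return app(environ, start_response)
    return middleware


def hello_app(environ, start_response):
    """Stand-in for the real site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello\n"]


def call(app, user_agent):
    """Run a WSGI app in-process with the given User-Agent and return its status line."""
    environ = {}
    setup_testing_defaults(environ)
    environ["HTTP_USER_AGENT"] = user_agent
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status

    list(app(environ, start_response))  # consume the response body
    return captured["status"]


if __name__ == "__main__":
    protected = block_crawlers(hello_app)
    print(call(protected, "Mozilla/5.0 (compatible; Bytespider)"))  # → 403 Forbidden
    print(call(protected, "Mozilla/5.0 (Windows NT 10.0)"))  # → 200 OK
```

Blocking at the edge (a CDN or reverse proxy) is usually cheaper than doing it in the application, because the rejected requests never reach the origin server at all.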

Although Triplegangers had no further downtime when work started on Thursday, the CEO was still left with an unresolved problem:

He doesn't know what data OpenAI scraped from the website, and he has no way to contact OpenAI about it.

What worries the CEO even more:

"If GPTBot hadn't been so greedy that it brought our website down, we might never have known it was scraping our data.

This process has a loophole. You big AI companies say we can configure robots.txt to block your crawlers, but that puts the responsibility on us."

Finally, the CEO urged other online businesses that want to stop large companies from scraping them without permission to proactively look for problems themselves.

Not the first case

Triplegangers is not the first company to suffer an outage caused by OpenAI’s aggressive crawler.

Before it, Game UI Database went through something similar.

The site is an online database of over 56,000 game user interface screenshots that game designers use for reference.

One day, the team noticed the site slowing down: page load times had tripled, users were frequently hitting 502 errors, and the homepage was being reloaded 200 times per second.

At first they assumed it was a DDoS attack, but when they checked the logs... it was OpenAI, querying the site twice per second and nearly paralyzing it.
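Rate figures like these come from counting log entries per second; the article doesn't say how the team measured them. One possible way, assuming timestamps taken from the bracketed field of Apache/Nginx access logs (which have one-second resolution), is sketched below. The function name `peak_requests_per_second` and the sample timestamps are hypothetical.

```python
from collections import Counter
from datetime import datetime

# Format of the bracketed timestamp in Apache/Nginx access logs,
# e.g. "10/Jan/2025:13:55:36 +0000" (assumed; %b expects English month names).
LOG_TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def peak_requests_per_second(timestamps):
    """Return (busiest_second, request_count); (None, 0) for an empty input."""
    if not timestamps:
        return None, 0
    # Log timestamps have one-second resolution, so entries stamped with
    # the same second fall into the same bucket.
    per_second = Counter(datetime.strptime(ts, LOG_TIME_FORMAT) for ts in timestamps)
    busiest, count = per_second.most_common(1)[0]
    return busiest, count


if __name__ == "__main__":
    # Made-up sample timestamps for illustration only.
    sample = ["10/Jan/2025:13:55:36 +0000"] * 3 + ["10/Jan/2025:13:55:37 +0000"]
    when, count = peak_requests_per_second(sample)
    print(when.isoformat(), count)  # → 2025-01-10T13:55:36+00:00 3
```

Filtering the timestamps by user agent first (for example, only GPTBot lines) separates a single crawler's rate from overall traffic.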

But is OpenAI the only one with crawlers this aggressive?

Not at all.

Anthropic, for example, has faced similar complaints before.

Joshua Gross, founder of digital product studio Planetary, said that after a website they had redesigned for a client went live, traffic surged and the client's cloud costs doubled.

An audit found that much of the traffic came from crawlers, mostly junk traffic from Anthropic's bot, and that a large share of those requests returned 404 errors.

More broadly, a new study from digital advertising company DoubleVerify found that AI crawlers drove an 86% increase in "general invalid traffic" (traffic not from real users) in 2024.

So why are AI companies, especially large-model developers, vacuuming up data from the internet so aggressively?

In short, they are desperately short of high-quality training data.

Some studies estimate that the world's available AI training data could be exhausted by 2032, which has pushed AI companies to accelerate data collection.

For the same reason, AI companies such as OpenAI and Google are now paying content creators handsomely for "never-before-published" videos to use as "exclusive" training material.

There is even a going rate. Unpublished footage originally shot for YouTube, Instagram, or TikTok fetches about $1 to $2 per minute (video footage generally goes for $1 to $4 per minute), and the price can climb higher depending on the quality and format of the video.